There are three types of XML transformations, listed below:

XML Source Qualifier Transformation: You can add an XML Source Qualifier transformation to a mapping by dragging an XML source definition to the Mapping Designer workspace or by manually creating one.

We can link one XML source definition to one XML Source Qualifier transformation.

We cannot link ports from more than one group in an XML Source Qualifier transformation to ports in the same target transformation.

XML Parser Transformation: The XML Parser transformation lets you extract XML data from messaging systems, such as TIBCO or MQ Series, and from other sources, such as files or databases.

We use it, for example, when we need to extract XML data from a TIBCO source and pass the data to relational targets.

The XML Parser transformation reads XML data from a single input port and writes data to one or more output ports.
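The single-input, multiple-output behaviour can be pictured with a minimal Python sketch. This is not how PowerCenter implements the transformation. It is a plain `xml.etree.ElementTree` analogy, and the `DEPTS`/`DEPT`/`EMP` element layout, the `parse_message` function and the generated key are all hypothetical. One XML string goes in, and two lists of rows (one per output group) come out, linked by a generated key.

```python
import xml.etree.ElementTree as ET


def parse_message(xml_text):
    """Split one XML string (the single input) into two output groups.

    Hypothetical layout: <DEPTS><DEPT>...<EMP>...</EMP></DEPT></DEPTS>.
    A generated key links each EMP row to its parent DEPT row.
    """
    root = ET.fromstring(xml_text)
    dept_rows, emp_rows = [], []
    for dept_key, dept in enumerate(root.iter("DEPT"), start=1):
        # DEPT output group: generated key plus the department columns
        dept_rows.append((dept_key, int(dept.findtext("DEPTNO")), dept.findtext("DNAME")))
        for emp in dept.iter("EMP"):
            # EMP output group: generated key of the parent DEPT row plus employee columns
            emp_rows.append((dept_key, int(emp.findtext("EMPNO")), emp.findtext("ENAME")))
    return dept_rows, emp_rows


message = """<DEPTS>
  <DEPT><DEPTNO>10</DEPTNO><DNAME>ACCOUNTING</DNAME>
    <EMP><EMPNO>7782</EMPNO><ENAME>CLARK</ENAME></EMP>
    <EMP><EMPNO>7839</EMPNO><ENAME>KING</ENAME></EMP>
  </DEPT>
</DEPTS>"""
depts, emps = parse_message(message)
print(depts)  # [(1, 10, 'ACCOUNTING')]
print(emps)   # [(1, 7782, 'CLARK'), (1, 7839, 'KING')]
```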

XML Generator Transformation: The XML Generator transformation lets you read data from messaging systems, such as TIBCO and MQ Series, or from other sources, such as files or databases, and generate XML from that data in the pipeline.

We use it when we need to extract data from relational sources and pass XML data to targets.

The XML Generator transformation accepts data from multiple ports and writes XML through a single output port.
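The reverse direction, many input columns in and one XML document out, can be sketched the same way. Again this is only a Python analogy, not Informatica's API: `generate_xml`, the `EMPS`/`EMP` tags and the sample rows are assumptions for illustration.

```python
import xml.etree.ElementTree as ET


def generate_xml(rows, root_tag="EMPS", row_tag="EMP"):
    """Combine rows arriving on several ports into one XML string.

    Each row is a dict of column name -> value (one key per input port).
    The whole result leaves as a single string, like the single output
    port of the XML Generator. Tag names are hypothetical.
    """
    root = ET.Element(root_tag)
    for row in rows:
        record = ET.SubElement(root, row_tag)
        for column, value in row.items():
            # One child element per input column; NULLs become empty elements
            ET.SubElement(record, column).text = None if value is None else str(value)
    return ET.tostring(root, encoding="unicode")


rows = [
    {"EMPNO": 7369, "ENAME": "SMITH", "SAL": 800},
    {"EMPNO": 7499, "ENAME": "ALLEN", "SAL": 1600},
]
xml_out = generate_xml(rows)
print(xml_out)
```

Building the tree with `ElementTree` rather than concatenating strings means special characters such as `&` or `<` in the data are escaped automatically.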

Stage 1 (Oracle to XML)

In this stage we generate an XML file as output, using the Oracle EMP table as the source.

Step 1: Create the XML target definition.

- Import the same EMP table as the source table.

- Go to Targets and click Import XML Definition.

- Next, choose Non-XML Sources from the left-hand pane.

- Move the EMP table (the source table) from All Sources to Selected Sources.

- Click Open to bring the target definition into the Target Designer.

- The sequence of steps for generating the XML target is shown in the screenshots below.

Fig a: Importing the XML definition

Fig b: First stage of generating the file; click OK in this window.

Fig c: Specify a name for the target table and click Next.

Fig d: Finally, click Finish in this window.

Step 2: Design the mapping by connecting the Source Qualifier (SQ) directly to the target table.

- Name the mapping according to your naming convention.

- Save the changes.

Step 3: Create the task and the workflow.

- Double-click the session task in the workflow, go to the Mapping tab and specify the output file directory (C:/) …

- Run the workflow, then check the C drive for a file named emp.xml …
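If you want to confirm that the run produced a usable file without opening it by hand, a small Python check like the one below can help. It is a hypothetical helper, not part of PowerCenter: it assumes each employee record is an element named `EMP` (adjust `row_tag` to match your XML target definition, including any namespace in `{uri}EMP` form) and uses a temporary sample file in place of the real output.

```python
import tempfile
import xml.etree.ElementTree as ET
from pathlib import Path


def summarize_xml_output(path, row_tag="EMP"):
    """Confirm a generated XML file exists and is well-formed; count its records.

    row_tag is an assumption: use the element name your XML target
    definition gives each EMP record.
    """
    file_path = Path(path)
    if not file_path.is_file():
        raise FileNotFoundError(f"No output file at {file_path}")
    # ET.parse raises ET.ParseError if the file is not well-formed XML
    root = ET.parse(file_path).getroot()
    return root.tag, sum(1 for _ in root.iter(row_tag))


# Demo on a throwaway sample; for the real run, pass the path of emp.xml
# in your configured output directory instead.
with tempfile.TemporaryDirectory() as tmp:
    sample = Path(tmp) / "emp.xml"
    sample.write_text("<EMPS><EMP><EMPNO>7369</EMPNO></EMP><EMP><EMPNO>7499</EMPNO></EMP></EMPS>")
    result = summarize_xml_output(sample)

print(result)  # ('EMPS', 2)
```

Comparing the record count with `SELECT COUNT(*) FROM EMP` on the source is a quick way to spot dropped rows.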

Stage 2 (XML to Oracle)

Here the source is the XML file and the target is the Oracle EMP table.

Step 1: Import the source XML definition and the target definition.

- Go to Sources and click Import XML Definition.

- Browse to the emp.xml file and open it.

- The first three windows of the wizard are the same as in Stage 1.

- The target table is the same EMP table.

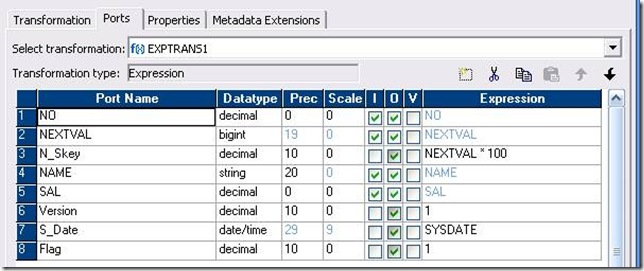

Step 2: Design the mapping.

- Connect the ports of the XML Source Qualifier to the matching columns of the EMP target.

- Save the mapping.
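Conceptually, the Stage 2 mapping flattens each employee element of emp.xml into one row of the EMP table. The sketch below shows that flattening in Python under stated assumptions: the `EMPS`/`EMP` element names, the column-to-type map in `EMP_COLUMNS` and ISO `YYYY-MM-DD` dates are all hypothetical, since the real layout comes from the XML definition you imported.

```python
import xml.etree.ElementTree as ET
from datetime import date

# Hypothetical column -> converter map standing in for the port links
# from the XML Source Qualifier to the EMP target columns.
EMP_COLUMNS = {
    "EMPNO": int,
    "ENAME": str,
    "HIREDATE": date.fromisoformat,  # assumes ISO dates in the XML
    "SAL": float,
    "COMM": float,
}


def xml_to_emp_rows(xml_text, row_tag="EMP"):
    """Flatten each <EMP> element into a tuple ordered like EMP_COLUMNS."""
    rows = []
    for record in ET.fromstring(xml_text).iter(row_tag):
        row = []
        for column, convert in EMP_COLUMNS.items():
            text = record.findtext(column)
            # Missing or empty elements become NULL (None) in the target row
            row.append(convert(text) if text else None)
        rows.append(tuple(row))
    return rows


source = """<EMPS>
  <EMP><EMPNO>7369</EMPNO><ENAME>SMITH</ENAME><HIREDATE>1980-12-17</HIREDATE><SAL>800</SAL></EMP>
  <EMP><EMPNO>7499</EMPNO><ENAME>ALLEN</ENAME><HIREDATE>1981-02-20</HIREDATE><SAL>1600</SAL><COMM>300</COMM></EMP>
</EMPS>"""
emp_rows = xml_to_emp_rows(source)
print(emp_rows)
```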

Step 3: Create the task and the workflow.

- Create the task and the workflow, following your naming conventions.

- In the session's Mapping tab, select the source in the left-hand pane and specify the path of the input file.

Step 4: Preview the data in the target table.
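Besides the Designer's data preview, you can check the loaded rows with a short query. The sketch below accepts any Python DB-API connection; `sqlite3` stands in for Oracle so it runs anywhere, and with Oracle you would pass a connection from a driver such as `python-oracledb` instead. `preview_target` is a hypothetical helper name.

```python
import sqlite3


def preview_target(conn, table="EMP", limit=5):
    """Return the row count and up to `limit` rows from the target table.

    Works with any DB-API 2.0 connection. The table name is inserted
    into the SQL text, so pass only trusted, known table names.
    """
    cur = conn.cursor()
    try:
        cur.execute(f"SELECT COUNT(*) FROM {table}")
        count = cur.fetchone()[0]
        # fetchmany avoids LIMIT/ROWNUM, which differ between databases
        cur.execute(f"SELECT * FROM {table}")
        rows = cur.fetchmany(limit)
    finally:
        cur.close()
    return count, rows


# sqlite3 stands in for Oracle here so the example runs anywhere
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE EMP (EMPNO INTEGER, ENAME TEXT)")
conn.executemany("INSERT INTO EMP VALUES (?, ?)", [(7369, "SMITH"), (7499, "ALLEN")])
count, sample = preview_target(conn)
print(count, sample)
```

Reading a handful of rows with `fetchmany` keeps the same SQL valid on both SQLite and Oracle, at the cost of not controlling which rows come back first.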